In 2024, regulators and online service providers fully aligned around the Digital Services Act (DSA), a groundbreaking piece of legislation that regulates the provision of online services within the EU, regardless of whether the provider is based in Europe or not. This year, the focus has shifted to implementing these legislative principles in practice. In particular, measures aimed at safeguarding minors have gained significant momentum, with age verification being a key example.

The European strategy for a better internet for kids (BIK+ strategy) is the EU's guiding framework for creating a safer and better online environment for children and young people, ensuring they all equally benefit from the opportunities the internet and digital technologies have to offer while safeguarding privacy, safety and well-being. A cornerstone of the strategy and a central political priority across the EU is keeping children safe from harmful and inappropriate content. This principle has been established as a legal requirement through a series of laws at the EU level.

The wider EU legal and policy framework for age assurance at a glance

1. The Digital Services Act (DSA)

The Digital Services Act (DSA) is a comprehensive regulation aimed at improving online safety and accountability across the European Union. It applies to online platforms and intermediaries, including social networks and marketplaces. Its primary objectives are to protect users and their fundamental rights, consumer rights, and democratic values online, as well as to establish clear accountability for online platforms while fostering a competitive digital market and preventing illegal content and harmful activities online.

A key focus of the DSA is the protection of minors, with specific articles outlining required measures for online platforms accessed by children. Providers of online platforms must ensure high levels of privacy, safety, and security for minors online and avoid profiling-based ads when targeting this age group (DSA Art. 28). The largest platforms, those with 45 million or more average monthly active users in the EU, must also conduct thorough risk assessments related to systemic risks that may affect children's well-being (DSA Art. 34). Additionally, these platforms are required to implement tailored mitigation strategies to address identified risks, which may include changes in design, content moderation, and the availability of supportive tools for minors (DSA Art. 35).
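To make the tiering concrete, the following minimal sketch maps a platform's EU user count to the DSA articles on minors summarised above. The function name and the constant are hypothetical, the threshold is treated as inclusive (45 million or more), and the sketch ignores exemptions and the formal designation of very large platforms by the Commission.

```python
# Hypothetical constant: EU user threshold for the largest platforms,
# treated here as inclusive (an assumption of this sketch).
VLOP_THRESHOLD = 45_000_000


def minor_protection_articles(monthly_eu_users: int) -> list[str]:
    """Return the DSA articles on minors that would apply under this simplified model."""
    if monthly_eu_users < 0:
        raise ValueError("user count must not be negative")
    # Art. 28 applies to providers of online platforms accessible to minors.
    articles = ["Art. 28"]
    # Art. 34 (risk assessment) and Art. 35 (mitigation) apply to the largest platforms only.
    if monthly_eu_users >= VLOP_THRESHOLD:
        articles += ["Art. 34", "Art. 35"]
    return articles
```

Under these assumptions, a platform with two million EU users would only be mapped to Art. 28, while one with 60 million would be mapped to all three articles.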

2. The Digital Fairness Act

The Digital Fairness Act will strengthen protection and digital fairness for consumers in areas that are not covered by the DSA, while ensuring a level playing field and simplifying rules for businesses in the EU. It will address specific challenges as well as harmful practices that consumers face online, such as deceptive or manipulative interface design, misleading marketing by social media influencers, addictive design of digital products and unfair personalisation practices, especially where consumer vulnerabilities are exploited for commercial purposes. Young people are an important consumer segment with specific consumption patterns and often act as early adopters of new technologies and digital products. The Digital Fairness Act will pay particular attention to the protection of minors online. A call for evidence and a public consultation about this initiative are currently underway.

3. The General Data Protection Regulation (GDPR)

The application of the General Data Protection Regulation (GDPR) in 2018 marked a significant step toward protecting children’s data online. For information society services, such as social networking sites, platforms for downloading music or online gaming, GDPR Art. 8 sets 16 as the age of digital consent. EU Member States can lower this threshold to as low as 13 years. If a child has not yet reached the legal age of digital consent, the processing of their personal data, and with that their access to digital services and platforms online, requires parental consent.
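The consent rule described above can be sketched in a few lines. This is an illustration only, not legal advice: the function and constant names are hypothetical, and the national threshold has to be supplied by the caller because it differs between Member States.

```python
DEFAULT_DIGITAL_CONSENT_AGE = 16  # default age under GDPR Art. 8
MIN_DIGITAL_CONSENT_AGE = 13      # lowest threshold a Member State may choose


def needs_parental_consent(age: int, national_threshold: int = DEFAULT_DIGITAL_CONSENT_AGE) -> bool:
    """Return True when the child is below the applicable age of digital consent."""
    # A national threshold outside the 13-16 range would not match the rule described above.
    if not MIN_DIGITAL_CONSENT_AGE <= national_threshold <= DEFAULT_DIGITAL_CONSENT_AGE:
        raise ValueError("national threshold must be between 13 and 16")
    if age < 0:
        raise ValueError("age must not be negative")
    return age < national_threshold
```

For example, a 14-year-old would need parental consent under the default threshold of 16, but not in a Member State that has lowered the threshold to 13.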

GDPR recognises that children merit specific protection with regard to their personal data. This means that even where there are no specific rules about children's data processing, controllers must make sure that children's data are well protected. This also applies to age assurance tools that process children’s personal data. In 2025, the European Data Protection Board (EDPB) issued a statement on GDPR and age assurance.

4. The Audiovisual Media Services Directive (AVMSD)

Under Article 28b of the AVMSD, which was last revised in 2018, video-sharing platforms (a specific category of online platforms), irrespective of their size, are required to take appropriate measures to protect minors from programmes, user-generated videos and audiovisual commercial communications which may impair their physical, mental or moral development. In particular, the most harmful content shall be subject to the strictest control measures. Such measures include establishing and operating age verification systems.

5. BIK Guide to age assurance

This resource, hosted on the Better Internet for Kids (BIK) website, highlights age assurance, bringing together a range of materials that outline current policy approaches to age assurance. Materials include a research report that maps age assurance typologies and requirements, a toolkit and easy-to-read explainers to help raise awareness of age assurance approaches in educational and family settings, and resources aimed at digital service providers to assist them in checking their own compliance, including a self-assessment tool.

In 2024, the European Commission published the report 'Mapping age assurance typologies and requirements', which provides an overview of the legal framework, ethical requirements, and typologies of age assurance.

It also explains important terminology such as 'age assurance', 'age verification', 'age estimation', 'self-estimation' and 'age-appropriate design'. The report emphasises the importance of a context-sensitive, child-rights-based and proportionate approach to age assurance.

The DSA and the protection of minors: age verification as a vital solution

The Digital Services Act (DSA) regulates online platforms of all sizes within the EU, including those designated as very large online platforms and search engines. The BIK+ strategy and the DSA work seamlessly together to create safer and better online environments for everyone, especially children and young people, who are the most vulnerable groups accessing the internet.

To provide further guidance to online service providers, the EU has published Guidelines on the protection of minors under the DSA. These guidelines enshrine a privacy-, safety- and security-by-design approach and are grounded in children’s rights. They also set out a non-exhaustive list of measures to protect children from online risks such as grooming, harmful content, problematic and addictive behaviours, as well as cyberbullying and harmful commercial practices. The guidelines also recommend that online platforms put effective age verification methods in place to restrict access to adult content such as pornography and gambling.

When it comes to enforcement, national Digital Services Coordinators (DSCs) are tasked with supervising, enforcing, and monitoring the DSA for all providers operating within their territory. For the very large online platforms and search engines, the responsibility is shared between the DSCs and the European Commission.

The Commission is also supporting other measures at EU level to keep children safe online. One example is the recently released EU age verification blueprint, which provides the technical basis for a user-friendly and privacy-preserving age verification method across the EU. This open-source technology aims to enable users to prove they are over the age of 18 when accessing adult-restricted content, without having to reveal any other personal information. It has been designed to be robust and will be interoperable with future European Digital Identity (EUDI) Wallets, which Member States are encouraged to make available for minors in their countries. Five countries – Denmark, France, Greece, Italy and Spain – will be the first to test the technical solution before releasing national age verification apps.
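The idea of proving an age threshold without revealing anything else can be illustrated with a small data-minimisation sketch. It does not reproduce the blueprint's actual data format or protocol: the class and function names are hypothetical, and the sketch omits the cryptographic signing that a real attestation would need.

```python
from dataclasses import dataclass
from datetime import date


@dataclass(frozen=True)
class IdentityRecord:
    # Hypothetical data held by a trusted issuer; never sent to the platform.
    name: str
    date_of_birth: date


@dataclass(frozen=True)
class AgeAttestation:
    # The only claim shared with the platform: no name, no date of birth.
    over_18: bool


def age_on(date_of_birth: date, today: date) -> int:
    """Age in completed years on the given day."""
    years = today.year - date_of_birth.year
    # Subtract one year if the birthday has not yet occurred this year.
    if (today.month, today.day) < (date_of_birth.month, date_of_birth.day):
        years -= 1
    return years


def issue_attestation(record: IdentityRecord, today: date) -> AgeAttestation:
    """Derive a minimal proof-of-age claim from the full identity record."""
    return AgeAttestation(over_18=age_on(record.date_of_birth, today) >= 18)
```

The design point is that the platform only ever receives the `AgeAttestation`, so it learns whether the threshold is met but nothing about who the user is or exactly how old they are.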

Age assurance is an umbrella term for the methods that are used to determine the age or age range of an individual to varying levels of confidence or certainty.

Age verification is 'a system that relies on hard (physical) identifiers and/or verified sources of identification that provide a high degree of certainty in determining the age of a user. It can establish the identity of a user but can also be used to establish [whether the user is over a certain minimum or under a certain maximum] age only'.

Definitions of relevant terminology from the European Commission's 'Mapping age assurance typologies and requirements', February 2024.

National approaches to age verification

Complementary to the EU-level approaches to the protection of children and young people online, the EU Member States support child online safety initiatives by closing any possible legal gaps on the national level and implementing activities and measures that foster a safer and better internet for children.

The annual BIK Policy monitor provides a comprehensive review of the implementation of the BIK+ strategy across all EU Member States, as well as Iceland and Norway. It offers an overview of relevant policy actions and legislation at play at the country level.

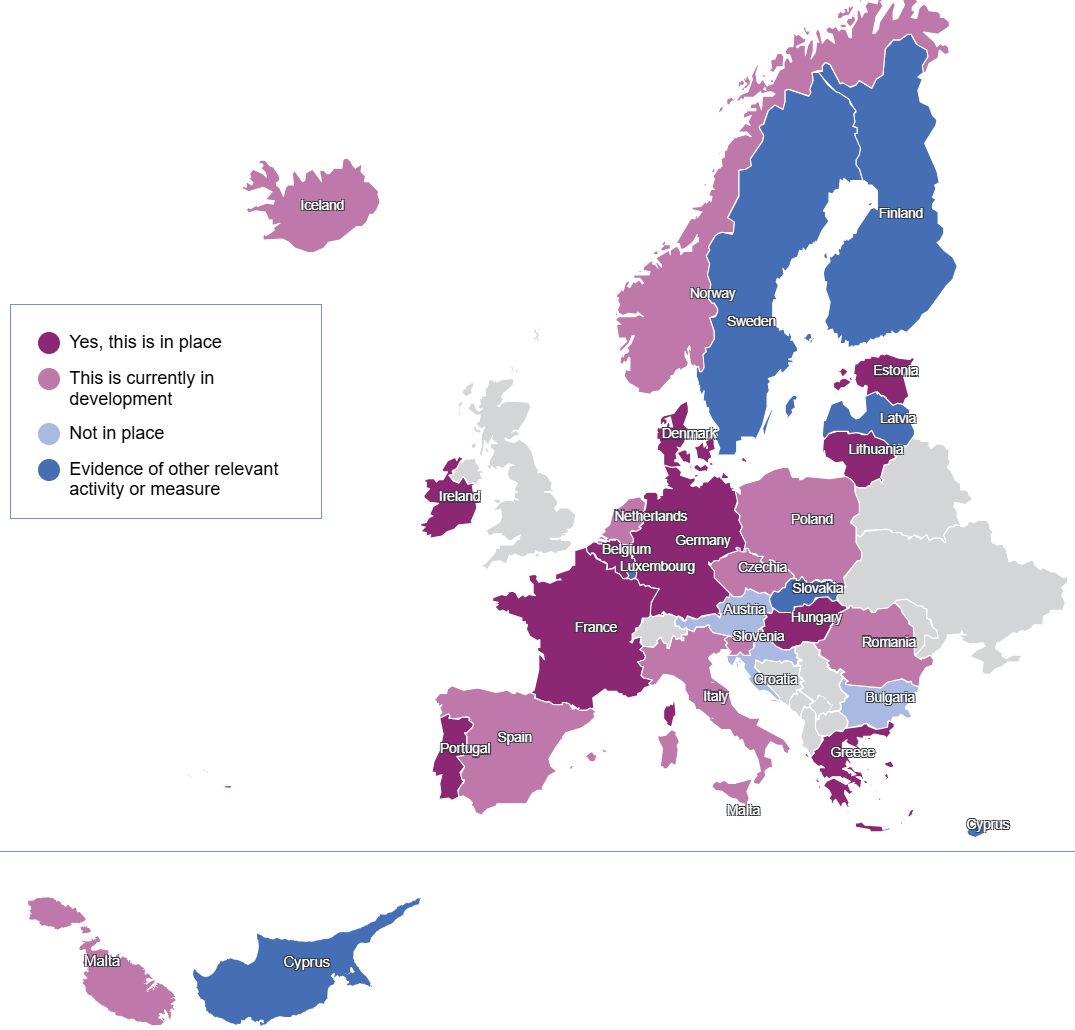

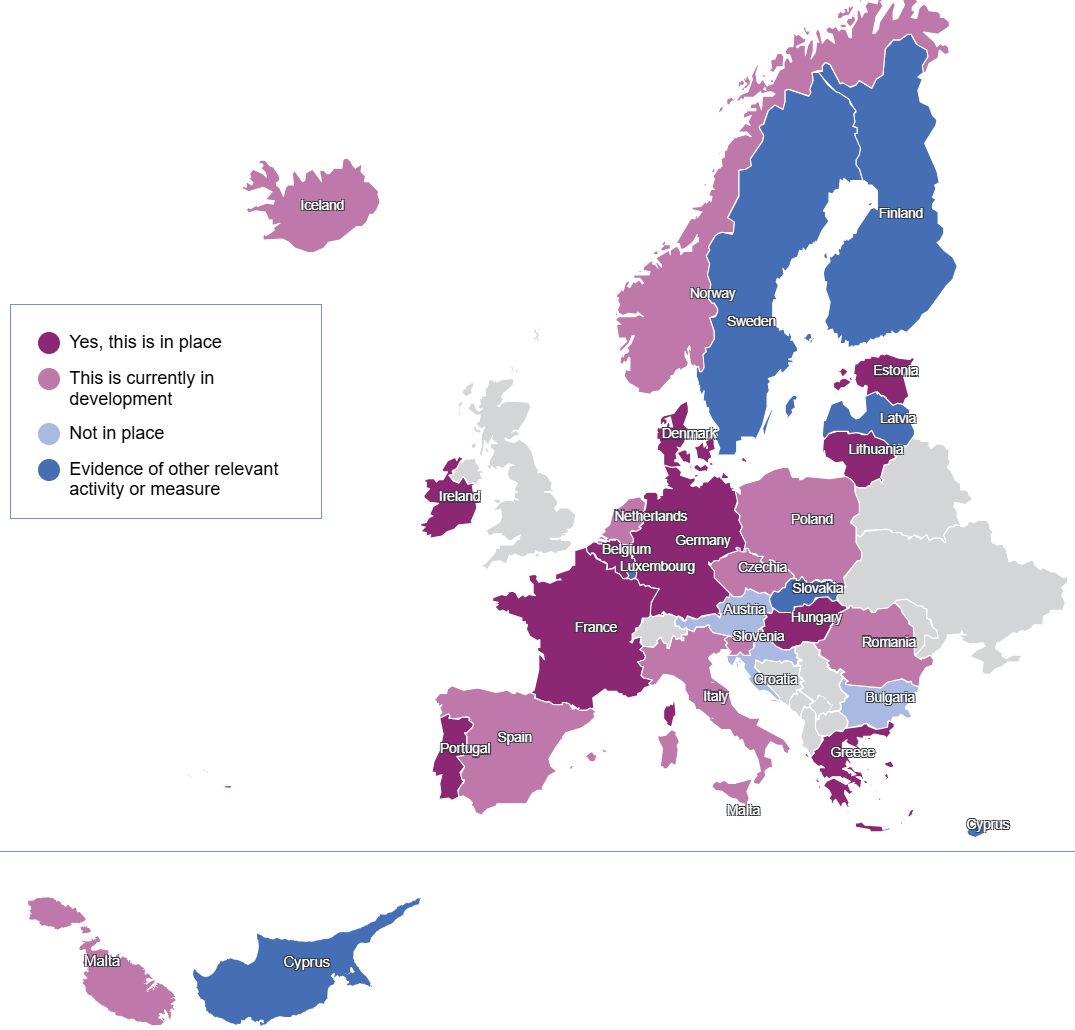

On age verification specifically, the 2025 BIK Policy monitor survey asked countries to report whether a national law or policy is in place that requires age verification mechanisms to prevent minors from accessing adult (over-18) online content or other restricted online services.

Data from the 2025 BIK Policy monitor (dimension '2-BIK+ Actions', topic '1-Safe digital experiences', item '3.8-Laws regarding age verification').

Ten of the 29 surveyed countries reported having national policies or regulations in place that address age verification requirements, with ten further countries reporting that such measures are currently in development (2025 BIK Policy monitor report, pp. 63-65). This is a significant increase compared to the 2024 iteration of the BIK Policy monitor, where only four countries reported national laws or policies that mandate age verification (pp. 87-88).
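As a quick check of what these counts mean in proportion, the short sketch below converts the reported 2025 figures into shares of the 29 surveyed countries. The helper name and constants are illustrative only; the counts themselves come from the text above.

```python
def share(count: int, total: int) -> float:
    """Percentage of surveyed countries, rounded to one decimal place."""
    if total <= 0:
        raise ValueError("total must be positive")
    return round(100 * count / total, 1)


SURVEYED = 29        # EU27 plus Iceland and Norway
IN_PLACE = 10        # countries with national rules on age verification in place
IN_DEVELOPMENT = 10  # further countries with such measures in development

in_place_share = share(IN_PLACE, SURVEYED)
combined_share = share(IN_PLACE + IN_DEVELOPMENT, SURVEYED)

print(f"In place: {in_place_share}%")                       # In place: 34.5%
print(f"In place or in development: {combined_share}%")     # In place or in development: 69.0%
```

In other words, roughly a third of the surveyed countries already have such rules, and about two thirds have them either in place or in development.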

As detailed in the 2025 BIK Policy monitor country profile for France, the country has adopted the 'SREN law' (July 2024), which mandates age verification for adult content and tightens the liability of online platforms and service providers. In January 2025, the French regulatory authority for audiovisual and digital communication, ARCOM, released a statutory technical standard for age verification systems, which requires online services distributing pornographic content to implement reliable age verification systems that prevent minors' access. In addition, France is developing its national EU Digital Identity Wallet in alignment with EU regulations, intending to make it available to all citizens by the EU's 2026 deadline.

Germany has a history of implementing innovative regulatory strategies to protect minors from inappropriate online content and restricted services. As outlined in the German 2025 BIK Policy monitor country profile, the Interstate Treaty on the Protection of Minors in the Media (which first entered into force on 1 April 2003) prohibits telemedia from offering pornographic content if the provider is unable to ensure that children and young people cannot access it. In Germany, age verification is also considered a possible measure to enhance minors' protection online under the DSA. Online platforms and service providers accessible to minors in Germany are checked by the Federal Agency for Child and Youth Protection in the Media to verify that they have taken suitable measures to keep adult content and restricted services inaccessible to minors. In parallel, the Federal Ministry for Family Affairs, Senior Citizens, Women, and Youth is working on a data-saving method for age verification, which is likely to work with the German eID card, currently available to all national ID card holders over the age of 16.

In Ireland, as highlighted in the Irish 2025 BIK Policy monitor country profile, Part A of its Online Safety Code contains a general obligation for online platforms and service providers to establish and operate age verification systems for service users with respect to content which may impair the physical, mental, or moral development of minors. Furthermore, the Irish Coimisiún na Meán has been collaborating intensively, nationally and at EU level, on age verification, including in the context of the recently released EU age verification blueprint, both in terms of the necessary technical specifications and the development of the application enabling such verification.

The EU way to foster effective age verification

As recommended by the 2025 State of the Digital Decade report, Member States are encouraged to "implement the harmonised EU age verification solution [the EU age verification blueprint] in the national EU Digital Identity (EUDI) wallets, including systems for issuing proof-of-age attestations, and accelerate the issuance of electronic means of identification to minors" (2025 State of the Digital Decade report, Annex 1, p. 31).

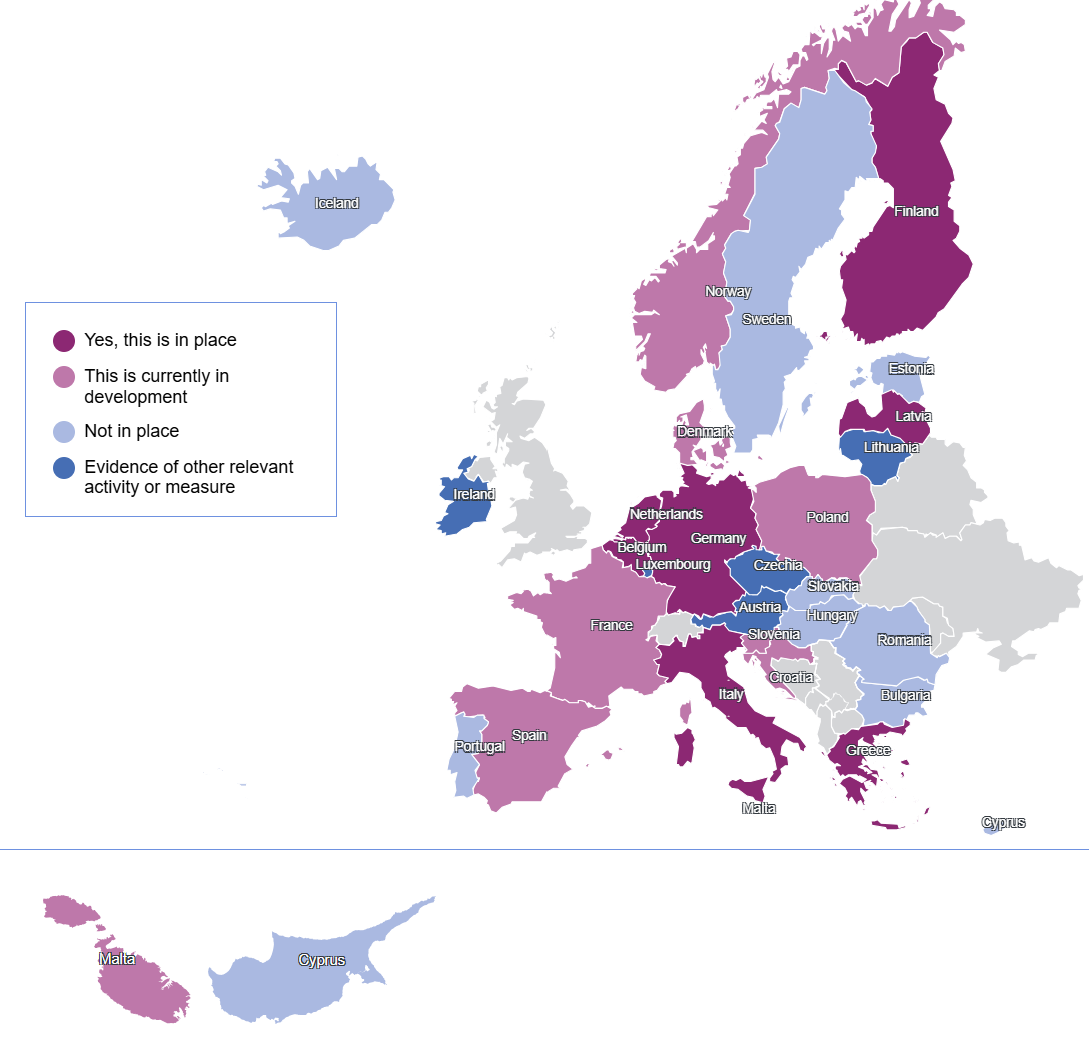

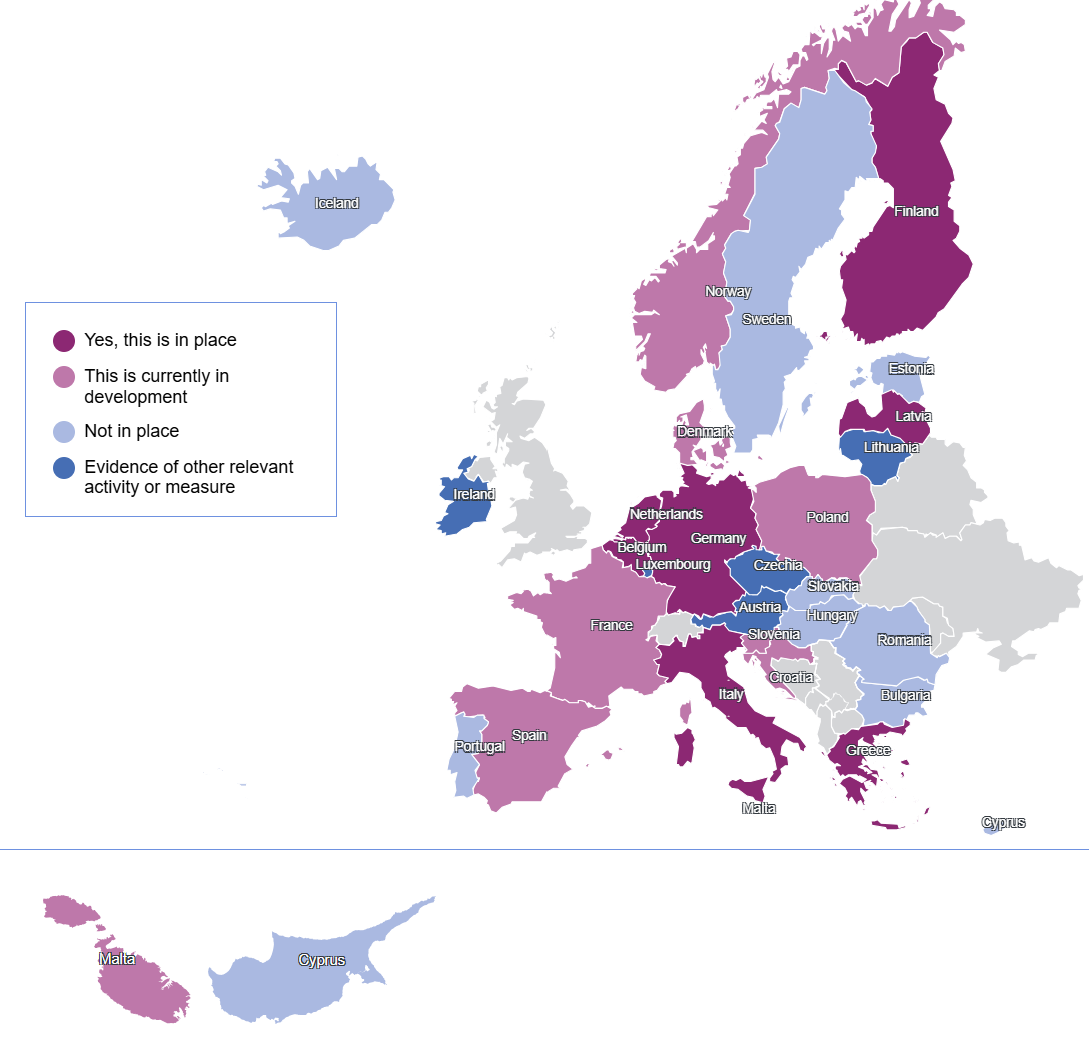

The 2025 BIK Policy monitor questionnaire also asked whether surveyed countries (EU27 + Iceland and Norway) have implemented, or are planning to implement, EUDI wallets for minors. Seven countries reported that this was already in place, and eight further countries indicated concrete plans to make the EUDI wallet available for minors in their country.

Data from the 2025 BIK Policy monitor (dimension '2-BIK+ Actions', topic '1-Safe digital experiences', item '3.9-EU Digital Identity Wallet (EUDI)').

The primary trend in digital identity systems for minors is to build on existing national infrastructures while ensuring proper age verification, in line with the EUDI initiative. Several countries are adapting or expanding their current national digital identity solutions for minors.

For instance, Austria, which has reported 'other relevant measures' in relation to the question about plans to implement the EUDI wallet for minors, allows those over 14 to apply for ID Austria, while also offering digital proof of age and digital school ID cards. On the other hand, Belgium's national digital identity wallet, MyGov.be, is available to all citizens, including minors. In other countries, including Lithuania, there are national tools available for verifying one's identity, which teenagers can use with parental consent. Many other countries, including Spain, the Czech Republic, Estonia, Ireland, Poland, and Sweden, explicitly reported their participation in collaboration, pilot projects and other ongoing efforts related to the EU's EUDI Regulation.

A roadmap to success – if done carefully

The European Union and its Member States are moving quickly, while paying close attention to privacy-preserving design and careful implementation of age verification methods compatible with digital wallet systems that assert age without exposing identity. All these approaches must be fully aligned with the DSA’s proportionate-measures ethos and give platforms a credible path to compliance that does not require collecting sensitive data at scale.

Looking ahead, the convergence of regulatory pressure, not least via the DSA, EU-level guidance, and national innovation, is likely to accelerate the uptake of more sophisticated age assurance methods and age verification systems. Yet the path forward must be both rights-respecting and user-centric to lead to success.

Interested in more?

If you are interested in more policy insight, explore the BIK Knowledge hub, including the BIK Policy monitor, the Rules and guidelines and Research and reports directories, where we draw together relevant policy instruments, reports and research informing the implementation of the BIK+ strategy at the national level.

Find more BIK Knowledge hub insights articles here.

Finally, don't forget to read the BIK Age assurance guide on our platform for more information on how different methods of age assurance work.
