The internet is a big part of everyday life for young people, whether they're learning, playing, creating, or simply staying in touch with friends. However, as digital spaces become increasingly central to how children grow up, it's equally important that these spaces are safe, respectful, and designed with their rights and needs in mind. That’s precisely where the Digital Services Act (DSA) comes in.

The DSA is a groundbreaking piece of legislation that regulates the provision of online services within the EU, regardless of whether the provider is based in Europe. It covers all intermediaries, from large social media platforms like Instagram and TikTok to medium-sized online marketplaces, as well as search engines like Google and Bing. Its primary objective is to enhance online safety by preventing illegal and harmful activities and the spread of disinformation while safeguarding user rights and privacy. The DSA emphasises the protection of children and sets clear responsibilities for online platforms to ensure user safety and a fair digital environment.

In the booklet “The DSA explained”, the European Commission highlights the most relevant aspects of the DSA for children and young people, in a family-friendly format and in all EU languages.

Putting children's safety, empowerment and participation first

One key objective of the DSA is to protect people under the age of 18. In particular, Article 28 of the DSA prohibits online platforms from presenting ads to minors that are based on profiling and requires platforms accessible to minors to take appropriate and proportionate measures to protect their privacy, safety, and security. Implementing such measures could mean, for instance,

- setting minors' accounts to private by default,

- modifying the platforms' recommender systems ('algorithms') to lower the risks of children and young people encountering harmful content,

- disabling features that contribute to excessive use, and

- implementing effective age assurance methods to reduce the risks of children being exposed to pornography or other age-inappropriate content.

In July 2025, the European Commission (EC) published guidelines to support online platforms that are accessible to minors in implementing appropriate and proportionate measures to protect children online, as required under DSA Art. 28. The guidelines outline key actions that platforms across the EU should take to protect minors more consistently, and they help companies develop and adapt the services used by minors so that these fully respect children's rights online.

Making the online world safer, easier to understand and navigate

The EC also released an EU age verification blueprint that provides the technical basis for a user-friendly and privacy-preserving age verification method across the EU. The blueprint is currently being tested and can be customised to national legal and language requirements. It is expected to be adopted and published as an app through the app stores by Member States and/or industry players.

This open-source technology aims to enable users to prove they are over the age of 18 when accessing adult-restricted content, without having to reveal any other personal information. It has been designed to be robust, user-friendly, and privacy-preserving, and will work together seamlessly with future European Digital Identity Wallets. It will technically also be possible to extend the age verification solution to other age limits.
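The idea of proving an age threshold without revealing anything else can be illustrated with a minimal sketch. It is not the blueprint's actual protocol: the names `age_on`, `AgeProof`, and `make_age_proof` are hypothetical, and a real solution would additionally rely on cryptographic signatures from a trusted issuer. The sketch only shows the data-minimisation principle, where the date of birth stays with the user and the platform receives a yes/no answer for a given age limit.

```python
from dataclasses import dataclass
from datetime import date


def age_on(birth_date: date, today: date) -> int:
    """Return the number of completed years of age on `today`."""
    years = today.year - birth_date.year
    # Subtract one year if this year's birthday has not happened yet.
    if (today.month, today.day) < (birth_date.month, birth_date.day):
        years -= 1
    return years


@dataclass(frozen=True)
class AgeProof:
    """Hypothetical attestation: holds only the age limit and a yes/no answer."""
    threshold: int
    meets_threshold: bool


def make_age_proof(birth_date: date, today: date, threshold: int = 18) -> AgeProof:
    # The birth date is used locally and is never copied into the proof.
    return AgeProof(
        threshold=threshold,
        meets_threshold=age_on(birth_date, today) >= threshold,
    )


# Example: the platform sees only the threshold and the boolean result.
proof = make_age_proof(date(2007, 7, 15), date(2025, 7, 15))
```

Because the threshold is a parameter, the same sketch covers other age limits, for example `make_age_proof(birth_date, today, threshold=16)`.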

The DSA also requires platforms to make their terms and conditions easier to read and understand, especially for younger users. This will help users to make informed choices about how they use platforms and what information they share online, among other things. The first evaluation of the European strategy for a better internet for kids (BIK+ strategy), which also involved children and young people, has shown that, so far, this practice is far from common.

Helping children and young people to report if something goes wrong online

If something goes wrong, such as seeing something inappropriate or experiencing online abuse, the Commission’s guidelines on the protection of minors under the DSA state that platforms should provide clear and simple ways to find support. Alongside these platform responsibilities, European countries are also stepping up efforts to tighten the safety net further, helping to keep children and young people safe online by establishing national complaint mechanisms.

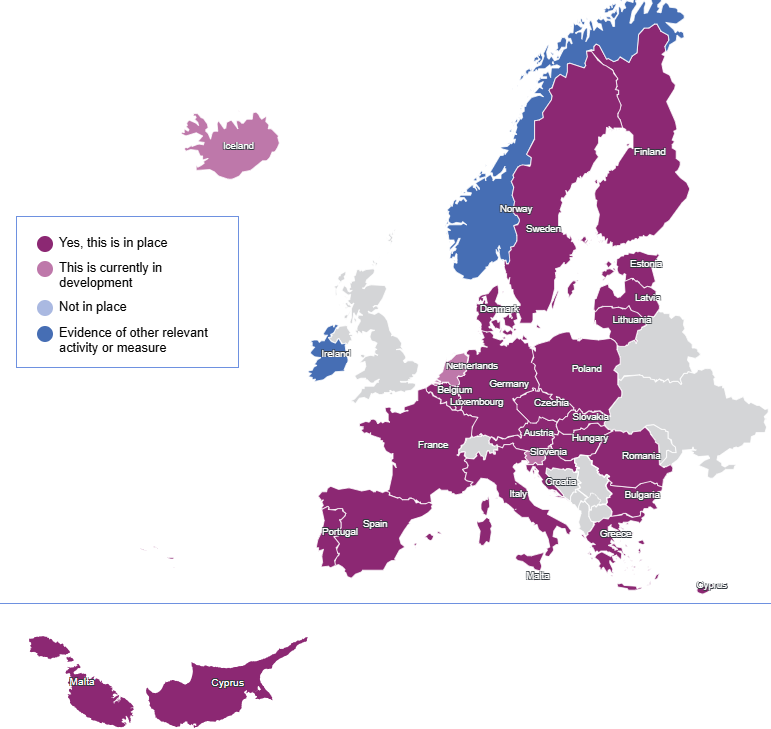

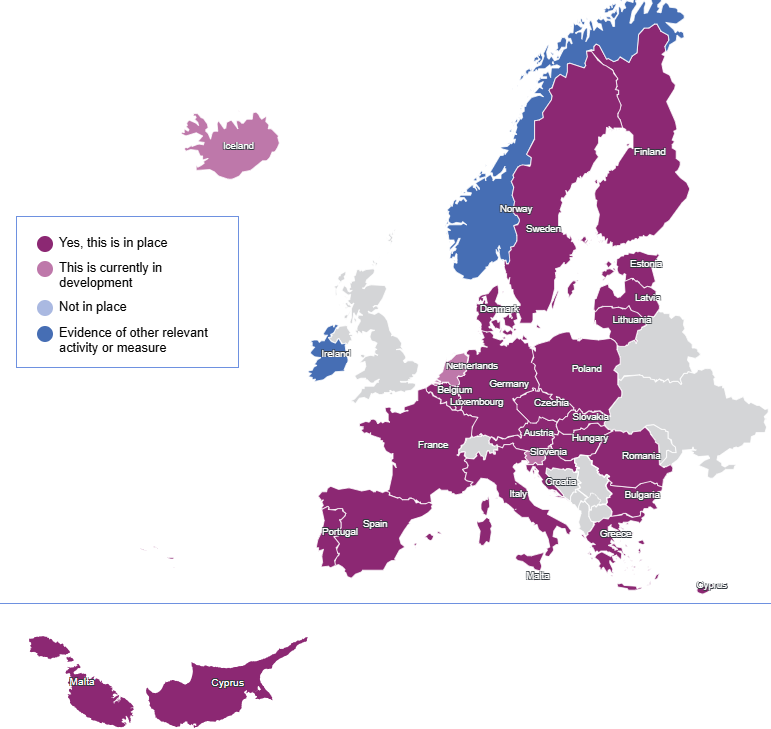

The 2025 edition of the BIK Policy monitor, the annual policy review activity that investigates the implementation of the BIK+ strategy across EU Member States, Iceland, and Norway, shows that European countries are making good progress, too. Out of 29 countries surveyed, 27 have either established or are currently developing formal processes that allow individuals, including children and young people, and organisations to file complaints about harmful online content, cyberbullying and other safety issues. Two more countries report having other measures in place to help with these concerns (BIK Policy monitor report 2025, pp. 59-61). It is important to note that these national complaint mechanisms are designed to complement, not replace, the reporting systems for illegal content that online platforms are required to provide under the DSA.

Data from the 2025 BIK Policy monitor (dimension '2-BIK+ Actions', topic '1-Safe digital experiences', item '3.5-Complaints handling mechanism').

Children and young users should be able to find help quickly and know their complaints will be taken seriously. Entities that have been awarded DSA trusted flagger status may help providers of online platforms tackle illegal content faster, as online platforms and service providers must deal with trusted flaggers' notices of such content as a priority. Many members of the EC co-funded Insafe-INHOPE network of Safer Internet Centres have already been awarded trusted flagger status. A continuously updated list of entities awarded trusted flagger status can be found on the European Commission’s website.

Applying and enforcing the rules

Of course, setting rules only matters if someone is making sure they’re followed. That’s why the DSA gives both the European Commission and national authorities (the Digital Services Coordinators, DSCs) new powers to supervise whether platforms comply with the regulation.

At the European level, the EC is responsible for supervision and enforcement in respect of providers of very large online platforms (VLOPs) and very large online search engines (VLOSEs). For this purpose, it can, for example, conduct investigations and inspections or request information from these providers. If they are found to have broken the rules, the EC can impose sanctions such as fines of up to six per cent of their annual global turnover.
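To give a sense of scale, the six per cent ceiling can be worked out with a few lines of arithmetic. This is a minimal sketch: the function name `max_fine` and the turnover figure are illustrative assumptions, not real cases, and the result is only the upper limit mentioned above, not the fine that would actually be imposed.

```python
from decimal import Decimal

# Ceiling stated in the text: up to six per cent of annual global turnover.
DSA_MAX_FINE_RATE = Decimal("0.06")


def max_fine(annual_global_turnover_eur: Decimal) -> Decimal:
    """Return the upper limit of a fine for a given turnover (hypothetical helper)."""
    return annual_global_turnover_eur * DSA_MAX_FINE_RATE


# Illustrative figure only: a provider with EUR 10 billion in annual global turnover.
example_ceiling = max_fine(Decimal("10000000000"))
```

For the illustrative turnover of EUR 10 billion, the ceiling is EUR 600 million.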

Some companies have already made changes to comply. For instance, many platforms have started limiting how they show ads to younger users, while others have made accounts private by default for users under 16, meaning only approved contacts can view what they post.

At the same time, several platforms are under formal investigation by the EC or the respective national authorities for failing to adequately protect children and young people or to properly assess and mitigate risks to their mental health, among other things.

Listening to children and young people across the EU

The EU's work on the protection of children and young people online does not stop at the desk of policymakers. Under the EU’s BIK+ strategy, young people themselves are being asked to help shape how digital safety works in real life. Every two years, the strategy is reviewed and progress assessed together with children and young people across Europe, giving them a direct voice in the evolution of the online world. To learn more about the ongoing evaluation of the BIK+ strategy, visit the Better Internet for Kids portal.

As part of this commitment, the EU is leading the way in ensuring children and young people have a voice in decision-making and policy-making. This was recently demonstrated during the development of the DSA Art. 28 guidelines, where young people from the Better Internet for Kids (BIK) youth programme and beyond took centre stage, shaping the guidelines with their contributions and perspectives. By adopting a youth-centred and rights-based approach, the EU, through the BIK initiative, continues to set high standards for meaningful participation that empowers the younger generation.

Looking ahead

The Digital Services Act aims to transform the internet for the better. It focuses on safety, transparency, responsibility, and accountability. It helps ensure that children and young people in Europe can enjoy everything the internet has to offer without being exposed to unnecessary risks, allowing them to feel safe, respected, and in control whenever they go online.

If you need help or advice about something you’ve seen or experienced online, you can contact the Safer Internet Centre (SIC) in your country at betterinternetforkids.eu/sic.

